SQL Scenario Based Interview Questions and Answers

Categories:

Written by:

Written by:Vivek Sankaran

SQL scenario based interview questions to learn how to wrangle date time fields for Facebook data science interviews.

Date Time functions are a favorite of the interviewers. Timestamped data is ubiquitous. Bank Transactions, Food Orders, Trips Data or User Weblogs – you see time series data everywhere. Given that nature of the data - each timestamp contains fields of interest like minute, day of the week, month, etc, one can create complex problems with simple datasets.

In this article, we look at the various date time types in SQL. We will then solve SQL scenario based interview questions from recent Facebook interviews and illustrate how to use the various datetime manipulation functions in SQL. We will also look at scenarios where you can use them and when not to.

SQL Datetime concepts and functions frequently tested in Facebook SQL Scenario Based Interview Questions

Some of the most tested SQL datetime function groups in Facebook SQL scenario based interview questions include.

- Understanding the difference between different datetime variable types in SQL

- Manipulation of interval, datetime and timestamp columns

- Subsetting by date and time ranges

- Extracting part of the datetime fields

The most common functions used for these data wrangling tasks are

- TIMESTAMP to convert a text (or string) to datetime type

- TO_CHAR() to convert datetime field to text

- EXTRACT() to get a part of the timestamp and date time columns

In these examples we will also look at the other SQL concepts like

- Window functions

- Common Table Expressions (CTEs)

- Joins on multiple tables

- Subqueries

- Data type conversion using CAST statement

The StrataScratch platform has many more such SQL interview questions that you can practice and ace your next interview. In this article, we look at these techniques in detail.

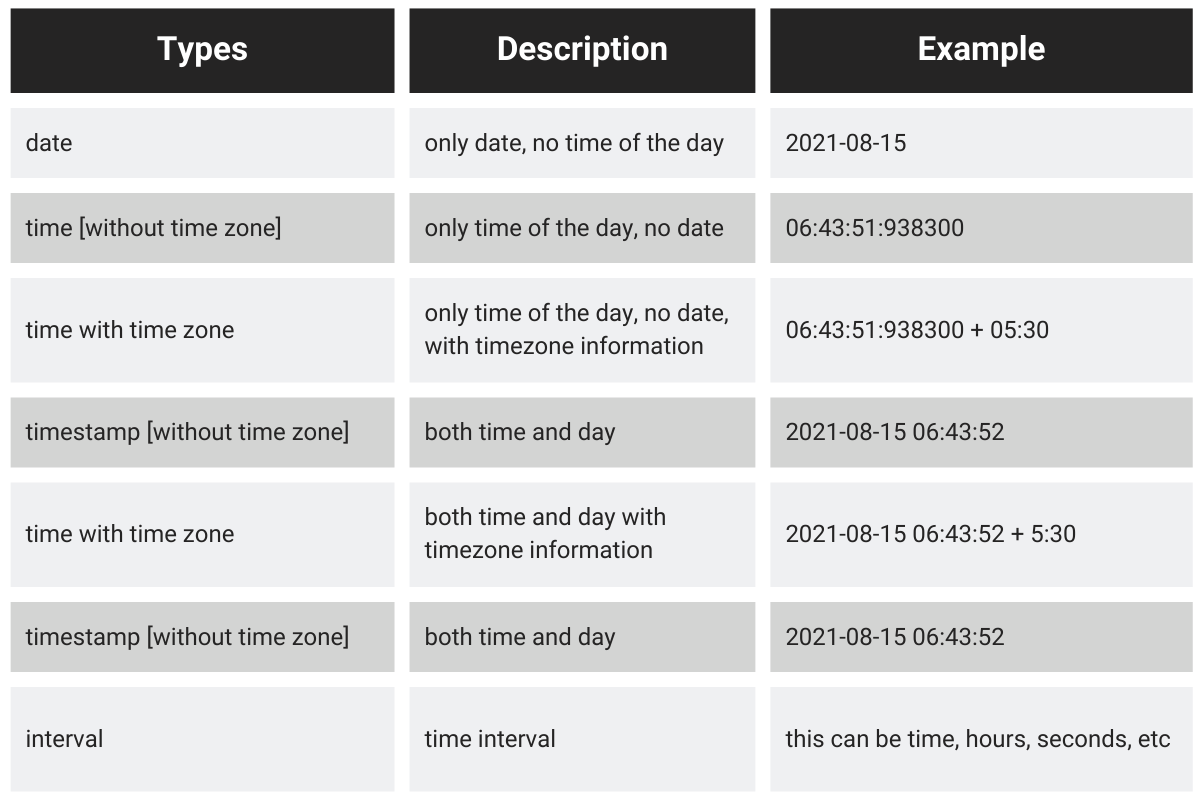

Date Time Variable Types

Most SQL implementations (SQLite being a notable exception) support different Date Time types. The common types available are:

date: contains only the date, not time of the day. For example: 2021-08-15

time: contains only the time of the day, not the date. By default, it is without a time zone identifier.

time with time zone: adds the additional time zone information for the time data type.

timestamp: Contains the full information date and time. By default, it is without a time zone identifier.

timestamp with time zone: adds the time zone information to for the timestamp data type.

interval: This is the difference between two date time type fields.

We need to be cognizant of the type of information contained in the field and the information requested in the output. Depending on the type of the variable, we might have access to different SQL date time functions specific to those date time fields. Example, you may not get the desired output from fields that have only dates if you want an hour by hour precision.

Let us use these datetime SQL functions on Facebook SQL scenario based interview questions. You can practice these and many such questions on the StrataScratch Platform. Let's warm up with an easy one.

SQL Scenario Based Interview Questions

SQL Scenario Based Interview Question #1: Number of Comments Per User in Past 30 days

Last Updated: January 2021

Return the total number of comments received for each user in the 30-day period up to and including 2020-02-10. Don't output users who haven't received any comment in the defined time period.

Table

You can solve this SQL scenario based interview question here: https://platform.stratascratch.com/coding/2004-number-of-comments-per-user-in-past-30-days

Below is the video if you want to check the video solution for this question:

Data View

This is a relatively straightforward dataset.

user_id: is the identifier of the Facebook account.

created_at: is the comment date

number_of_comments: represents the number of comments by the user on that day

Logic

To solve this, we can do the following.

- The current date is given (2020-02-10). Therefore, we take the difference of created_at field with the current date.

- Take only the comments that fall in the 30-day window.

- Aggregate the number of comments by user_id.

Solution:

Let’s code our logic.

1. We start off by taking the difference from the created_at field with the reference date (2020-02-10). We can use the TIMESTAMP function to convert a string to a timestamp and directly perform mathematical calculations.

select *, timestamp '2020-02-10' - created_at as date_diff

from fb_comments_count;The date_diff field is an interval with the default precision (In this case seconds)

2. We can now subset this difference using the INTERVAL function to get the desired subset of comments. The INTERVAL function converts a text into an interval data type. This will help us compare the values as if they were numbers.

select *, timestamp '2020-02-10' - created_at as date_diff

from fb_comments_count

where timestamp '2020-02-10' - created_at

between interval '0 days' and interval '30 days';3. Now we can aggregate the values by user_id and complete the query.

select user_id, sum(number_of_comments) as number_of_comments

from fb_comments_count

where timestamp '2020-02-10' - created_at between interval '0 days'

and interval '30 days'

group by user_id;Output

Let us crank things up a bit with the next one.

SQL Scenario Based Interview Question #2: Comments Distribution

Last Updated: November 2020

Write a query to calculate the distribution of comments by the count of users that joined Meta/Facebook between 2018 and 2020, for the month of January 2020.

The output should contain a count of comments and the corresponding number of users that made that number of comments in Jan-2020. For example, you'll be counting how many users made 1 comment, 2 comments, 3 comments, 4 comments, etc in Jan-2020. Your left column in the output will be the number of comments while your right column in the output will be the number of users. Sort the output from the least number of comments to highest.

To add some complexity, there might be a bug where an user post is dated before the user join date. You'll want to remove these posts from the result.

Tables

You can solve this SQL scenario based interview question here: https://platform.stratascratch.com/coding/10297-comments-distribution

Dataset View

This problem uses two datasets

fb_comments

fb_users

The relevant fields for our problem are

- user_id and created_at fields from the fb_comments table. These will be used to identify the user and the timestamp of her comment.

- id and joined_at fields from the fb_users table. These will be used to identify the joining date for a user.

Now that we have a handle of the data, let us try to build the logic to solve the SQL scenario based interview question.

Logic

- The two data sets must be merged. The join keys are user_id in the fb_comments table and id in the fb_users table.

- Subset the merged tables

- Keep only the users whose join dates are from 2018 to 2020

- Filter comments for Jan 2020

- Remove the users who posted before they joined

- Summarize comments by user_id and then the users by number of comments

Solution

This is one of the hard level SQL scenario based interview questions and slightly longer than the previous one. We will use SQL CTEs to reuse the queries whenever possible.

1. Let us start off by merging the two tables. We will keep only the relevant fields. user_id and created_at fields from the fb_comments table and id and joined_at fields from the fb_users table. We use INNER JOIN to ensure we take users with both comments as well as joining dates available.

with user_comments as

(

select a.joined_at, b.user_id, b.created_at

from fb_users a inner join fb_comments b

on a.id = b.user_id

)

select * from user_comments;2. We now subset the dataset to keep only the users and comment dates that satisfy the problem criteria

a) Keep only the users whose join dates are from 2018 to 2020 – To accomplish this we could use the TIMESTAMP function as earlier. Or we can use the EXTRACT function. The EXTRACT function is used to get only a part of a datetime field like hours, seconds, time zone, etc. Here we will take only the year since we want the users who joined between 2018 and 2020.

with user_comments as

(

select a.joined_at, b.user_id, b.created_at

from fb_users a inner join fb_comments b

on a.id = b.user_id

WHERE

EXTRACT(YEAR FROM a.joined_at) IN (2018, 2019, 2020)

)

select * from user_comments;

b) Filter comments for Jan 2020: To do this we can use the TIMESTAMP function as earlier or EXTRACT year and month separately. Alternatively, we can also use the TO_CHAR() function. The TO_CHAR() function converts a numeric value like integer or floating point values or a quasi-numeric field like datetime fields to a character expression. Here we extract the Month and Year from the comment date (created_at field) and subset comments for January 2020.

with user_comments as

(

select a.joined_at, b.user_id, b.created_at, to_char(b.created_at,

'MONYYYY') as month_yr

from fb_users a inner join fb_comments b

on a.id = b.user_id

WHERE

EXTRACT(YEAR FROM a.joined_at) IN (2018, 2019, 2020)

AND to_char(b.created_at, 'MONYYYY') = 'JAN2020'

)

select * from user_comments;c) Remove the users who posted before they joined. We can add this by simply keeping only the records where the comment date is after the date that the user joined.

with user_comments as

(

select a.joined_at, b.user_id, b.created_at, to_char(b.created_at,

'MONYYYY') as month_yr

from fb_users a inner join fb_comments b

on a.id = b.user_id

WHERE

EXTRACT(YEAR FROM a.joined_at) IN (2018, 2019, 2020)

AND to_char(b.created_at, 'MONYYYY') = 'JAN2020'

AND a.joined_at <= b.created_at

)

select * from user_comments;3. Now that we have all the relevant records, let us summarize. We need to get the number of users for each number of comments. How many users made 1 comment, 2 comments, 3 comments, 4 comments, etc.

a) We first summarize the number of comments for each user

with user_comments as

(

select a.joined_at, b.user_id, b.created_at, to_char(b.created_at,

'MONYYYY') as month_yr

from fb_users a inner join fb_comments b

on a.id = b.user_id

WHERE

EXTRACT(YEAR FROM a.joined_at) IN (2018, 2019, 2020)

AND to_char(b.created_at, 'MONYYYY') = 'JAN2020'

AND a.joined_at <= b.created_at

),

summ_comments as

(

select user_id, count(*) as num_comments

from user_comments

group by user_id

)

select * from summ_comments;b) Now we summarize this output counting the number of users on the number of comments. We arrange this in the ascending order of the number of comments and we have the final output.

with user_comments as

(

select a.joined_at, b.user_id, b.created_at, to_char(b.created_at,

'MONYYYY') as month_yr

from fb_users a inner join fb_comments b

on a.id = b.user_id

WHERE

EXTRACT(YEAR FROM a.joined_at) IN (2018, 2019, 2020)

AND to_char(b.created_at, 'MONYYYY') = 'JAN2020'

AND a.joined_at <= b.created_at

),

summ_comments as

(

select user_id, count(*) as num_comments

from user_comments

group by user_id

)

select num_comments, count(*) as num_users

from summ_comments

group by num_comments

order by num_commentsOutput

In this case we treated the datetime fields both as numeric values as well as a text string using SQL Datetime functions. To finish things off, let us try a difficult level problem involving datetime functions.

Find more advanced level Facebook data scientist interview questions here.

SQL Scenario Based Interview Question #3: Time Between Two Events

Last Updated: July 2018

Meta/Facebook's web logs capture every action from users starting from page loading to page scrolling. Find the user with the least amount of time between a page load and their scroll down. Your output should include the user id, page load time, scroll down time, and time between the two events in seconds.

Table

You can solve this SQL scenario based interview question here: https://platform.stratascratch.com/coding/9784-time-between-two-events

Dataset View

The dataset is relatively straightforward. We have a transaction record for each user with the user action and the timestamp. We need all three fields for solving the problem. Let us build the logic first.

Logic

- We need the earliest timestamp for page load time and scroll down time.

- Take the difference of these two timestamps in seconds

- Output the user with the smallest difference.

Solution

This is one of the hard level SQL scenario based interview questions. While the dataset is simple, the query has multiple layers to it. Let us implement this in parts. We will again use CTEs so that we can reuse the queries.

1. Get the earliest page load time and scroll down time for each user. We can remove the other actions as they are not relevant to the query.

with first_actions as (

select user_id, action, min(timestamp) as earliest_ts

from facebook_web_log

where action in ('page_load', 'scroll_down')

group by user_id, action

order by user_id, action

)

select * from first_actions;2. We have two values per user. We can now do a sub query by action and merge by user_id or split the CTE into two CTEs and then merge. Enter the CASE statement.

a) Since we need to take the difference between the timestamps, instead of taking individual minima, we can use the CASE statement to conditionally create two timestamp variables

with first_actions as (

select user_id, action,

(CASE

WHEN action = 'page_load' THEN timestamp

ELSE NULL

END) as page_load_ts,

(CASE

WHEN action = 'scroll_down' THEN timestamp

ELSE NULL END) as scroll_down_ts

from facebook_web_log

where action in ('page_load', 'scroll_down')

)

select * from first_actions;

b) We can now take the minimum of the two timestamp fields in the same query with the MIN() function and group by user_id. SQL will treat the quasi-numeric datetime fields as if they were numbers.

with first_actions as (

select user_id,

MIN(CASE

WHEN action = 'page_load' THEN timestamp

ELSE NULL

END) as page_load_ts,

MIN(CASE

WHEN action = 'scroll_down' THEN timestamp

ELSE NULL END) as scroll_down_ts

from facebook_web_log

where action in ('page_load', 'scroll_down')

group by user_id

order by user_id

)

select * from first_actions;3. We can now take the difference between the two timestamps. Since we need the difference in seconds, we need to convert the difference to a time type output rather than an interval type. We can use the CAST function to do it.

with first_actions as (

select user_id,

MIN(CASE

WHEN action = 'page_load' THEN timestamp

ELSE NULL

END) as page_load_ts,

MIN(CASE

WHEN action = 'scroll_down' THEN timestamp

ELSE NULL END) as scroll_down_ts

from facebook_web_log

where action in ('page_load', 'scroll_down')

group by user_id

order by user_id

)

select * from first_actions;4. We can now take the lowest value by sorting and limiting the output

with first_actions as (

select user_id,

MIN(CASE

WHEN action = 'page_load' THEN timestamp

ELSE NULL

END) as page_load_ts,

MIN(CASE

WHEN action = 'scroll_down' THEN timestamp

ELSE NULL END) as scroll_down_ts

from facebook_web_log

where action in ('page_load', 'scroll_down')

group by user_id

order by user_id

)

select *, CAST(scroll_down_ts - page_load_ts AS time) as time_diff

from first_actions

order by time_diff

limit 1;Output

SQL Scenario Based Interview Question #4: Users by Average Session Time

Last Updated: July 2021

Calculate each user's average session time, where a session is defined as the time difference between a page_load and a page_exit. Assume each user has only one session per day. If there are multiple page_load or page_exit events on the same day, use only the latest page_load and the earliest page_exit. Only consider sessions where the page_load occurs before the page_exit on the same day. Output the user_id and their average session time.

Table

Link to the question: https://platform.stratascratch.com/coding/10352-users-by-avg-session-time

Data View:

All three fields are relevant for our problem.

Logic

- Find the duration of user sessions for each day.

- Group rows by user_id value. Each group will have records that describe the sessions of a specific user.

- Find the average duration of users’ sessions.

Solution

1. Available data shows that a user can have multiple sessions on different days. So in order to calculate the average duration for each user, first, we need to measure the session duration for each day.

Each record in the facebook_web_log table represents one user action. To calculate the duration, we need to work with two actions - page_load and page_exit simultaneously. So we need two copies of the action data.

We can INNER JOIN the table with itself on a shared dimension - user_id values.

We use the WHERE clause with three conditions to filter rows in the combined table. From the first copy of the actions table, we need to keep only ‘page_load’ actions. From the second copy, we should keep only ‘page_exit’ actions. The third condition is logical - ‘page_exit’ should happen after ‘page_load’.

At this time, we need to find the length of the session for each individual day. We should group rows by unique pairs of user_id and date values. In the SELECT statement, we cast timestamp values to generate date values.

The question specifically tells us to find the duration between the latest page load and the earliest page exit. In the SELECT statement, we use min() and max() aggregate functions to measure the duration of a user session.

We use max() to find the latest ‘page_load’ and min() to find the earliest ‘page_exit’. We need to find the difference between these two values and save it as ‘session_duration’.

Finally, we use the WITH clause so that we can reference the resulting dataset as all_user_sessions.

with all_user_sessions as

(select t1.user_id,

t1.timestamp::date as date,

min(t2.timestamp::timestamp) - max(t1.timestamp::timestamp) as session_duration

from facebook_web_log t1

join facebook_web_log t2 on t1.user_id = t2.user_id

where t1.action = 'page_load'

and t2.action = 'page_exit'

and t2.timestamp > t1.timestamp

group by 1,

2)2. Once we have information about user sessions on each day, we need to SELECT and create groups for each user_id. Records in each group will describe the sessions of a specific user.

We use the AVG() aggregate function to find the average duration of sessions for the user.

with all_user_sessions as

(select t1.user_id,

t1.timestamp::date as date,

min(t2.timestamp::timestamp) - max(t1.timestamp::timestamp) as session_duration

from facebook_web_log t1

join facebook_web_log t2 on t1.user_id = t2.user_id

where t1.action = 'page_load'

and t2.action = 'page_exit'

and t2.timestamp > t1.timestamp

group by 1,

2)

select user_id,

avg(session_duration)

from all_user_sessions

group by user_idThe final output should look like this:

SQL Scenario Based Interview Question #5: Dates of Inspection

Last Updated: April 2018

Find the latest inspection date for the most sanitary restaurant(s). Assume the most sanitary restaurant is the one with the highest number of points received in any inspection (not just the last one). Only businesses with 'restaurant' in the name should be considered in your analysis.

Output the corresponding facility name, inspection score, latest inspection date, previous inspection date, and the difference between the latest and previous inspection dates.

Order the records based on the latest inspection date in ascending order.

Table

Link to the question: https://platform.stratascratch.com/coding/9714-dates-of-inspection

Data View

To answer this question, we need the following values:

facility_name: We find restaurants by looking at facility_name values. They must contain the text ‘restaurant’.

score: To answer this question, we should output restaurants that received the highest score. We should include specific score values as well.

activity_date: The question asks us to return the latest inspection date, the previous inspection date, and the difference between them. We need to work with activity_date values to find these values.

Logic

- Identify the most sanitary restaurants

- Calculate the previous inspection date and the difference between the latest and previous inspections

- Output specified values and order records

Solution

1. First, let’s find the latest inspection date for all records. The simplest approach is to write a subquery with two values: facility_name and activity_date.

We create this subquery to find the latest inspection date. To do this, we group records by facility_name values and use the max() aggregate function to find each group's highest (latest) inspection date.

select facility_name,

max(activity_date) as max_date

from los_angeles_restaurant_health_inspections

group by facility_nameWe can add the latest date (the result of max() aggregate function) to the main table by performing an INNER JOIN. The main table and the subquery should be combined on a shared dimension - facility name.

SELECT *

from los_angeles_restaurant_health_inspections as la

inner join

(select facility_name,

max(activity_date) as max_date

from los_angeles_restaurant_health_inspections

group by facility_name) a on la.facility_name = a.facility_nameSo far, we have all rows from the los_angeles_restaurant_health_inspections table. But many of the records do not represent restaurants.

According to the question description, a facility can be classified as a restaurant only if its facility_name value contains the text ‘restaurant’. We need an additional WHERE clause to remove rows that do not meet these criteria.

There is one more condition - we should only consider restaurants with the highest inspection scores. So our WHERE clause should have two conditions.

We use the ILIKE logical operator to set the first condition - facility_name should contain ‘restaurant’ text. AND logical operator chains this condition with another one - the score value should be equal to the highest score in the entire table.

This is necessary because the question specifies that we only want restaurants with the highest scores. To get the highest score, we write a short subquery where we use the max() aggregate function to return the highest score value in the entire table.

select *

from los_angeles_restaurant_health_inspections as la

inner join

(select facility_name,

max(activity_date) as max_date

from los_angeles_restaurant_health_inspections

group by facility_name) a on la.facility_name = a.facility_name

where la.facility_name ilike '%restaurant%'

and score =

(select max(score)

from los_angeles_restaurant_health_inspections)So far, our query returns restaurants with the highest score:

As you can see, all facilities have the text ‘restaurant’ in their facility_name and score of 100.

This table has many columns. In the preview, you need to scroll all the way to the right to see the max_date column. Values in this column refer to the latest inspection date.

2. Next, we need to work with available data to create two values: the date of the previous inspection (previous from the latest) and the difference between the latest and previous inspections.

Each record in the table describes one inspection. It’s safe to assume that a restaurant can have multiple inspection records.

To facilitate finding the previous inspection, we should list all inspections for a specific restaurant and order them ascendingly.

Because dates are ordered in ascending order (earlier ones first, followed by later inspections), the previous inspection is simply the activity_date value of the previous row.

To follow this approach, we need to essentially ‘group’ inspection records for each restaurant and arrange them in ascending order by their activity_date values.

Window functions are the perfect SQL feature for this task. They can be used to generate a new value for each row in the partition, which is essentially a group of rows for every unique facility_name value. We can arrange inspections by their date in ascending order in each partition.

Finally, we need to create a new value for the current row by taking the activity_date row of the previous row. Fortunately, there is a LAG() window function in SQL to do just that. It creates a new column but offsets each value by one row.

Learn more about LAG() and window functions here.

The difference between the inspection date and the previous one is easier to find. Simply cast activity_date to a date type and subtract the previous date.

Finally, we have every value we need - facility name, inspection score, latest inspection date, previous inspection date, and the difference between high-scoring inspections.

We should use the WITH clause to save the query as a common table expression so we can SELECT these values in the final step.

with cte as

(select la.facility_name,

score,

activity_date,

max_date,

lag(activity_date, 1) over(partition by la.facility_name

order by activity_date) as prev_activity_date,

activity_date :: date - lag(activity_date :: date, 1) over(partition by la.facility_name

order by activity_date) as number_of_days_between_high_scoring_inspections

from los_angeles_restaurant_health_inspections as la

inner join

(select facility_name,

max(activity_date) as max_date

from los_angeles_restaurant_health_inspections

group by facility_name) a on la.facility_name = a.facility_name

where la.facility_name ilike '%restaurant%'

and score =

(select max(score)

from los_angeles_restaurant_health_inspections))3. Finally, we can SELECT all the necessary values from the common table expression. The question tells us to return records for only the latest inspections.

We can use an additional WHERE clause with one condition - inspection date (activity_date column) should be the same as max_date (the latest date).

with cte as

(select la.facility_name,

score,

activity_date,

max_date,

lag(activity_date, 1) over(partition by la.facility_name

order by activity_date) as prev_activity_date,

activity_date :: date - lag(activity_date :: date, 1) over(partition by la.facility_name

order by activity_date) as number_of_days_between_high_scoring_inspections

from los_angeles_restaurant_health_inspections as la

inner join

(select facility_name,

max(activity_date) as max_date

from los_angeles_restaurant_health_inspections

group by facility_name) a on la.facility_name = a.facility_name

where la.facility_name ilike '%restaurant%'

and score =

(select max(score)

from los_angeles_restaurant_health_inspections))

select facility_name,

score,

activity_date,

prev_activity_date,

number_of_days_between_high_scoring_inspections

from cte

where activity_date = max_dateHere’s how the expected output should look like.

Conclusion

While solving these SQL scenario based interview questions we looked at the different ways in which you can manipulate the datetime fields in SQL. We saw the difference between the different types and when to use each type. We also converted a datetime field to a text and vice versa using the TO_CHAR() and TIMESTAMP functions. We also worked on problems that used only a part of the timestamp.

Datetime manipulation is a favorite of interviewers because timestamped data is omnipresent. Using specific datetime functions can help you save a lot of time that would be otherwise wasted in multiple subqueries. You too can master SQL Date time functions like these and many other SQL functions on StrataScratch. We have a community of over 20,000 like-minded data science enthusiasts. Join today and give yourself the best chance of acing Data Science Interviews at FAANG and other top companies.

Share