Advanced SQL Interview Questions You Must Know How to Answer

Categories:

Written by:

Written by:Nathan Rosidi

Advanced SQL interview questions that every data scientist should know. It is quite important to understand what is being tested and how you can prepare for it.

The importance of SQL in any data-related job can never be overstated. It is widely regarded as the go-to solution for many problems data scientists encounter on a daily basis. It is no surprise then to find out that advanced SQL interview questions or problems are still being asked in many interviews for data-related roles.

Since advanced SQL problems are being asked on data-related interviews, it is quite important to understand what is being tested and how you can prepare for a data science interview. We have gathered some advanced SQL interview questions asked by real companies in 2021 that we wanted to share and help you answer them. That way you will be all set for your next data interview where you will more than likely be asked some of these SQL interview questions. We have listed all the problems below, with some solutions as well as guidance that will lead you in the right direction when you do these problems yourself.

Advanced SQL Interview Questions from Redfin

SQL Interview Question #1: Rush Hour Calls

Question Link: https://platform.stratascratch.com/coding-question?id=2023

What we need to find out is how many customers have called more than 3 times between 3 PM and 6 PM, based on the data we have. This SQL interview question is regarded to be of medium difficulty. Basically, what the interviewers are testing for in this case is whether you can use the basic query statements to pull out certain data from columns and then use some more advanced clauses to match the data you pulled to some specific criteria in other columns.

Here is a short sample of the data being presented:

As you can see, there are 4 columns (one of them being the row identifier) which give you the timestamp of the call, how many calls the customer made and the duration of the calls.

Hint: You will not be needing all of the columns to answer the question.

Here is the solution:

SELECT count(*)

FROM

(SELECT DISTINCT request_id

FROM redfin_call_tracking

WHERE date_part('hour', created_on::TIMESTAMP) BETWEEN 15 AND 18

GROUP BY request_id

HAVING count(*)>=3) sqIf the code makes sense and you got something like this, great job.

As you can see from the code, and as we mentioned before, you would need to know basic query statements such as SELECT, FROM, WHERE and GROUP BY, but also some less-famous clauses such as DISTINCT, GROUP BY and HAVING. The reason for using these is that the task at hand requires us to pull a specific time frame from one column (created_on) which also needs to fit the criteria from request_id column about being unique (one customer per) and having more than 3 calls.

When it comes to this question, the part where most people get confused is usually not knowing these concepts, but being able to organize it in a logical flow and remembering all the steps while being in a high-pressure situation such as an interview. The reason this advanced SQL interview question gets asked in interviews is to be able to test whether you can pull specific data that fits some criteria from one column, and then combine it with pulling specific data from other columns. In other words, they check whether you can do a query with multiple sub-queries included in your problem.

SQL Interview Question #2: Update Call Duration

Question Link: https://platform.stratascratch.com/coding-question?id=2022

Let us look at another problem from Redfin

Dataset:

So, the question here is, what is the average duration for all UPDATE calls. This question is also considered to be of medium difficulty. The reason this question gets asked is to find out whether you can partition one (or more) of your columns and then do a query on one of them. You might be asked to do a query on all the separated parts from the columns as well, but the process is the same.

Approach:

We will let you work on this one yourself, but here are some hints to help you get started:

- average all calls from the column that tracks calls in the database in your query;

- set some criteria in your query to pull specific rows from the id column;

- in these criteria, partition your data based on the values in request_id column

- run another sub-query where your request_id values are greater than 1 (meaning only update calls are captured).

SQL Interview Question #3: Initial Call Duration

Question Link: https://platform.stratascratch.com/coding-question?id=2021

The final problem by Redfin that we will examine in this article:

Dataset:

Slightly different request than before, here you are asked to find the average duration of INITIAL calls. This question is also of medium difficulty. The reason this question gets asked is to understand whether you can query only the first calls (identified as 1) from the request_id column.

Approach:

You can try doing this one yourself, and if you managed to complete the previous task, you can pretty much use the same process. However, we will give you some hints on how to do it faster:

- average all call durations in your query;

- set a sub-query that will only pull the minimum values from the request_id column (since all initial calls are marked with a value 1).

Advanced SQL Interview Questions from Postmates

SQL Interview Question #4: Customer Average Orders

Question Link: https://platform.stratascratch.com/coding-question?id=2013

Here is a simple interview question from Postmates:

What we are looking for is how many customers placed an order and what the average amount of that order is. The question difficulty is easy. Before we examine the solution, let’s look at the data set:

As you can see, there are several columns, including the ones for unique customer ID and order amount. What is being tested here is whether you can count all unique data points from one column and then do some sort of a simple mathematical operation from your count (in this case, it is the average amount).

Hint: Unique is the magic word!

Here is the solution:

SELECT count(DISTINCT customer_id),

avg(amount)

FROM postmates_ordersPretty simple code, right? Not to worry if you were struggling with this question, as there is a trick to it. When you look at the data above in more detail, especially the customer_id column, you will see that some customers have multiple orders (same id numbers repeating). This is why you need to use the DISTINCT function in your query before pulling the average amount, in order to get the right results.

The reason this question gets asked in interviews, even though it looks relatively simple, is to test your due diligence and attention to detail. If you have done your query without the DISTINCT clause, you would still get some result that looks like an average and you might not even know that you made a mistake because the number you were expecting is probably similar to what you got. However, if you have a habit of always examining your data to look for anything you might need to specify in your code, you will have no problem answering this question.

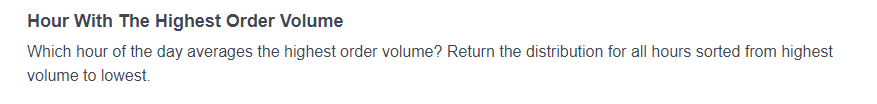

SQL Interview Question #5: Hour with The Highest Order Volume

Question Link: https://platform.stratascratch.com/coding-question?id=2014

Let’s look at another problem from Postmates, also being of easy difficulty:

Dataset:

The question here is which hour of the day averages the highest order volume. You also need to return the distribution of all hours sorted from highest volume to the lowest. The reason this question gets asked is to verify whether you can divide one column into periods (hours in this case), and then perform several mathematical operations once this division is done such as counting, pulling the average, finding the maximum value and ordering the values in descending order.

Approach:

You can work on this one yourself, and we will provide guidance as to how your code can look like:

- average the number of orders per hour in your query;

- divide your dataset into hourly increments based on the timestamp column (you can use date_part function);

- count the number of orders and group them into hourly increments;

- find the max value of your sub-queried hourly data;

- order the values in a descending fashion.

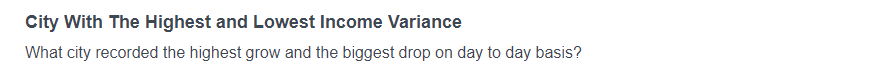

SQL Interview Question #6: City with The Highest and Lowest Income Variance

Question Link: https://platform.stratascratch.com/coding-question?id=2015

Another problem from Postmates, but this time it is medium difficulty:

Datasets:

The question being asked is what city recorded the highest grow and the biggest drop on a day-to-day basis. The reason this question is being asked is to identify your database manipulation skills, when you have two or more related databases which you can merge to perform operations on.

Approach:

Here is how you can solve this problem:

- identify the columns representing the city name from one table and city id from the other;

- query these columns as one value;

- create a new value for variation that will be the difference between two different dates in the dataset;

- create two sub-queries dedicated to calculating the sum of orders for two different days for each city (you will need to specify the exact dates from the timestamp column);

- difference between the two sums should be your variation value;

- pull minimum and maximum for your variation value.

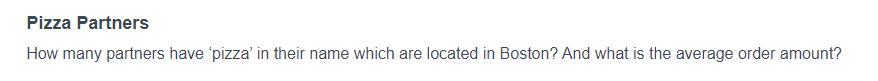

SQL Interview Question #7: Pizza Partners

Question Link: https://platform.stratascratch.com/coding-question?id=2016

Last interview question by Postmates that we will examine in this article, also being of medium difficulty:

Datasets:

So, the question here is how many partners have ‘pizza’ in their name which are located in Boston. Also, we need to find out the average order amount from these places. The reason this question is being asked is to further identify your data manipulation skills when the requested query includes varchar data type.

Approach:

Here is how you can solve the problem:

- select the name column from the table where all pizza partners are listed and average the amount column from the first table;

- join the orders table and pizza partners table using a common column identifier;

- make sure your sub-query includes the word ‘pizza’ in order to identify all partners with pizza in their name from your newly joined table;

- have a second, exclusive criteria in your sub-query where ‘Boston’ values will be returned;

- group the average values by the pizza partners’ names.

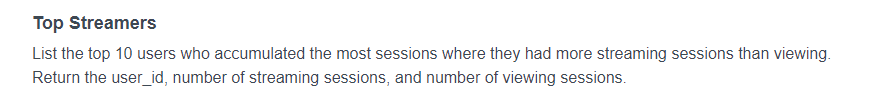

Advanced SQL Interview Questions from Twitch

SQL Interview Question #8: Top Streamers

Question Link: https://platform.stratascratch.com/coding-question?id=2010

Here is an interview question from Twitch:

We need to list the top 10 users who accumulated the most sessions where they had more streaming sessions than viewing as well as return the user_id, number of streaming sessions and number of viewing sessions. The question difficulty is medium. Before we examine the solution, let’s look at the dataset:

As you can see, there are several columns with different data types. What is being tested here is whether you can set appropriate conditional statements to get the data requested and whether you can use this conditional data to run queries and perform mathematical operations.

Here is the solution:

SELECT user_id,

count(CASE

WHEN session_type='streamer' THEN 1

ELSE NULL

END) AS streaming,

count(CASE

WHEN session_type='viewer' THEN 1

ELSE NULL

END) AS VIEW

FROM twitch_sessions

GROUP BY user_id

HAVING count(CASE

WHEN session_type='streamer' THEN 1

ELSE NULL

END) > count(CASE

WHEN session_type='viewer' THEN 1

ELSE NULL

END)

LIMIT 10As you can see, there are lots of conditional statements, as well as several other clauses in this query, so don’t worry if you were struggling with this one. You needed to count the number of times your conditional statements were true, group them by user_id and limit the results to top 10.

The reason this question gets asked at interviews is to test your knowledge of conditional statements as well as manipulation of the results gotten from the conditions. This is a very important area for practice as you will more than likely be using conditional statements on a day-to-day basis in your data job.

SQL Interview Question #9: Users with Two Statuses

Question Link: https://platform.stratascratch.com/coding-question?id=2009

Here is another interview question from Twitch, this time the difficulty is easy:

Dataset:

So, we need to find users who are both a viewer and a streamer. The reason this question gets asked is to identify whether you can find values from one column that satisfy multiple criteria from another column.

Approach:

Here is how you can solve this problem:

- start a query that gives user_id as output;

- group the results by user_id;

- count the number of results that have two distinct values in the session_type column.

SQL Interview Question #10: Session Type Duration

Question Link: https://platform.stratascratch.com/coding-question?id=2011

Another question from Twitch, also considered to be easy:

Dataset:

The question is, how do we calculate the average session duration for each session type? The reason this question gets asked is to verify whether you can do mathematical operations between two columns and group the results by criteria set in a third column.

Approach:

Here is how to solve the problem in a few simple steps:

- start a query that gives session_type as output;

- set a new variable that gives the average value when subtracting session end from session start column;

- group the results by session_type.

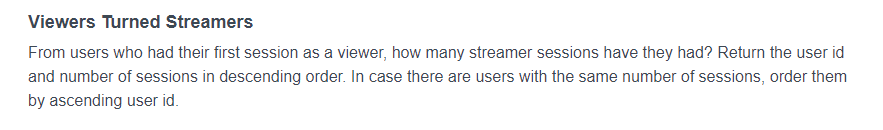

SQL Interview Question #11: Viewers Turned Streamers

Question Link: https://platform.stratascratch.com/coding-question?id=2012

Final question from Twitch that we will examine, and this one is considered to be of hard difficulty:

Dataset:

The question being asked is how many streamer sessions have users who had their first session as a viewer had. We need to return user id and number of sessions in descending order; if there are users with the same number of sessions, we need to order them by ascending order id. There are several concepts being tested here, which is why the question is considered to be hard. The reason this question is being asked is to find out whether you can do a more complex query in which you need to identify multiple criteria from multiple columns and then group the results by another set of criteria.

Approach:

Here are some steps you can follow to solve this problem:

- start a query that gives two outputs: user id and the number of sessions;

- set a criterion that the query should return results from streamers in the session_type column;

- start two sub-queries:

- one that gives user id as output;

- one that gives outputs for user id, session type and ranks session starts by individual users (you can set a new variable for this operation)

- once you have ranked session starts and separated them by users, finish your sub-query by selecting the lowest ranking value that also has viewer as session type;

- group the query by user id, order by the number of sessions in a descending order and by user id in an ascending order.

Check out this video to understand the top 5 coding concepts that companies will test you on in 2021.

Advanced SQL Interview Questions from Facebook

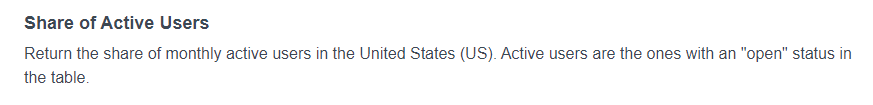

SQL Interview Question #12: Share of Active Users

Question Link: https://platform.stratascratch.com/coding-question?id=2005

Let’s look at an interview question from Facebook:

We need to find the share of monthly active users in the United States (US). We also have a further explanation that active users are the ones with an “open” status in the table. This question is of medium difficulty. Before we look at the solution, let’s examine the data:

As we can see, there are 4 columns of data that we can use. What the interviewers are trying to verify here is whether you can filter out appropriate values from two different columns and then count the number of results that are filtered out.

Here is the solution:

SELECT active_users /total_users::float AS active_users_share

FROM

(SELECT count(user_id) total_users,

count(CASE

WHEN status = 'open' THEN 1

ELSE NULL

END) AS active_users

FROM fb_active_users

WHERE country = 'USA') subqAs you can see, a new variable had to be created along with a conditional statement and some basic clauses in order to get the answer to this one. Not to worry if you did not get the result you wanted, as this question is not the easiest out there.

The reason this question gets asked at interviews is to test your knowledge of conditional statements, queries, creating new variables that represent a particular mathematical operation and counting the results.

SQL Interview Question #13: Users Activity Per Day

Question Link: https://platform.stratascratch.com/coding-question?id=2006

Another question from Facebook, and this one is considered to be easy:

Dataset:

What is being asked here is to return a distribution of users’ activity per day of the month; the solution should consist of a day number (between 1 and 31) and the number of users activity for the day. The reason this question being asked is to check whether you can query the date data and count the queried data appropriately.

Approach:

Here is how you can approach solving this problem:

- start a query that gives two outputs: filtered daily increments from the post_date column using the date_part function and counting the total number;

- grouping the results by the date_part output you specified in your query.

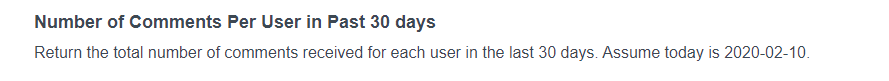

SQL Interview Question #14: 30 Day Commenting

Question Link: https://platform.stratascratch.com/coding-question?id=2004

Yet another question from Facebook, also considered to be of easy difficulty:

Dataset:

What we need to do here is to return the total number of comments received for each day in the last 30 days; we should also assume today is 10-02-2020. What is being tested here is whether you can manipulate the date column to only pull the results from a certain period as well as count the number of results in your query.

Approach:

One of the ways you can approach this solution:

- start a query that gives two outputs: user id and total number of comments per user;

- set a clause in your query to pull only values that are created between 10-02-2020 and 30 days before that date, and set an interval to 1 day;

- group the result by user id.

Check out our article SQL Scenario Based Questions and Answers to find the solution for this question.

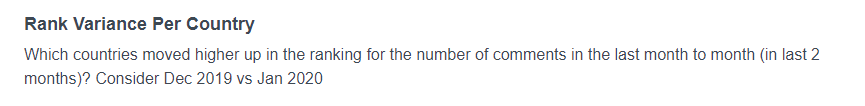

SQL Interview Question #15: Rank Variance Per Country

Question Link: https://platform.stratascratch.com/coding-question?id=2007

The final advanced SQL interview question from Facebook that we will examine in this article, and this one is considered to be hard:

Datasets:

The question being asked is which countries moved higher up the ranking for the number of comments in the last month to month (last two month). We need to consider December 2019 vs January 2020. Since this question is considered to be quite hard, it’s no surprise that there are several clauses and operations that need to be implemented in order to get the result. The reason this question is being asked on interview is to determine whether you can identify and join table using the common identifier, and perform numerous data manipulation activities on multiple columns.

Approach:

The steps you can follow to solve the problem:

- start a query that gives the county column as an output;

- start a sub-query that ranks the comments and orders them by the number of comments for December as well as January

- you can define two new variables here to help you separate December and January comments, and don’t forget to rank and order comments separately for each month;

- start another sub-query that splits the created_at column into monthly increments using the date_part function (you can define this as a new variable) and pulls a sum of comments for December;

- join the two tables using a common identifier (user_id column);

- set a clause that the data only need to be pulled if it was created between December 1, 2019 and December 31, 2019 and the country value is not empty;

- group the results by country and the month of December (using the date_part function);

- join December results with January results by repeating the last three steps and changing the values to reflect January 2020 criteria;

- set a final clause in your query to pull only the data where the value for January comments subtracted from December comments is greater than zero

- if you defined these variables separately as suggested in step 2, you can only use their names here.

Find more Facebook SQL questions here.

Advanced SQL Interview Questions from Credit Karma

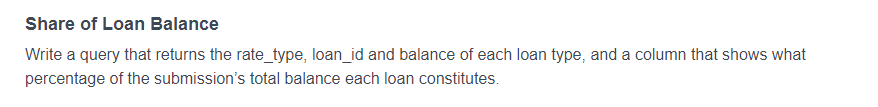

SQL Interview Question #16: Share of Loan Balance

Question Link: https://platform.stratascratch.com/coding-question?id=2001

Let us look at an interview question from Credit Karma this time:

We need to write a query that returns the rate_type, loan_id and balance of each loan type, and a column that shows what percentage of the submission’s total balance each loan constitutes. This question is considered to be medium difficulty. Before we examine the solution, let’s look at the data:

If we examine the table, we will see that there are 5 columns that we can use. What the interviewers are checking for when asking this question is whether you can write queries that will have multiple outputs and whether you can perform some operations on the expected outputs. Here’s a solution:

SELECT s1.loan_id,

s1.rate_type,

sum(s1.balance) AS balance,

sum(s1.balance)::decimal/total_balance*100 AS balance_share

FROM submissions s1

LEFT JOIN

(SELECT rate_type,

sum(balance) AS total_balance

FROM submissions

GROUP BY rate_type) s2 ON s1.rate_type = s2.rate_type

GROUP BY s1.loan_id,

s1.rate_type,

s2.total_balance

ORDER BY s1.rate_type,

s1.loan_id

You can see from the solution that there are 4 expected outputs that result from the query. You also had to perform joins, grouping and ordering by functions to get to the solution. Not to worry if you were struggling with this one, as it is considered to be medium difficulty.

The reason this question gets asked on interviews is to test your query preparation skills. On top of being able to perform all the operations and clauses from the solution, it was very important to know what types of outputs to expect from your query right away. This is a skill that majority of interviewers will appreciate.

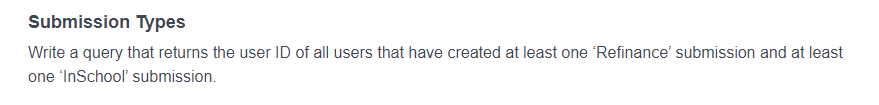

SQL Interview Question #17: Submission Types

Question Link: https://platform.stratascratch.com/coding-question?id=2002

Another question from Credit Karma, this time the question’s difficulty is easy:

Dataset:

The task at hand is to write a query that returns user ID of all users that have created at least one ‘Refinance’ submission and at least one ‘InSchool’ submission. The reason this gets asked on interviews is to be able to verify whether you can perform a query that has multiple distinct criteria.

Approach:

One of the ways you can answer this question:

- start a query that gives user id as output;

- set criteria that the type of data queried will be exactly Refinance and InSchool;

- set criteria that the queried data will have two (2) distinct types of data.

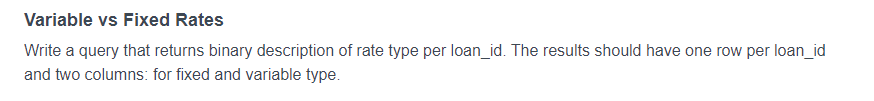

SQL Interview Question #18: Variable vs Fixed Rates

Question Link: https://platform.stratascratch.com/coding-question?id=2000

Let’s look at another question from Credit Karma, this time the question difficulty is medium:

Dataset:

What we need to do is write a query that returns binary description of date type per loan id. The results should have one row per loan id and two columns: one for fixed and one for variable type. This is being asked on interviews in order to test your knowledge on and ability to work with conditional statements within a query.

Approach:

Here is a way you can solve this question:

- start a query that gives 3 (three) outputs; loan id should be one of them;

- for the second output, count the number of times the word ‘fixed’ appears in the rate_type column for every loan id value, by using a conditional statement;

- for the third input, repeat all the steps from the second input with switching the keyword to ‘variable’;

- group the query by loan id.

SQL Interview Question #19: Recent Refinance Submissions

Question Link: https://platform.stratascratch.com/coding-question?id=2003

The final question from Credit Karma that we will examine. The difficulty level for this one is also medium:

Datasets:

What we are being asked here is to write a query that joins the submissions table to the loans table and returns the total loan balance on each user’s most recent ‘Refinance’ submission. We need to return all users and the balance for each of them. The reason this question is being asked on interviews is to find out whether you can identify and produce explicit values that fit multiple criteria in your query.

Approach:

Here is how you can try to solve the problem:

- start a query that gives user id and the sum of balance column as outputs;

- start a sub-query that, from the loans table, returns distinct id, user id, highest value from the created at column, and is partitional by type of rate;

- specify that the type of rate for the data to be partitioned by shall include the word ‘Refinance’;

- join the tables using id and loan_id columns;

- group the results by user id.

Advanced SQL Interview Questions from Ring Central

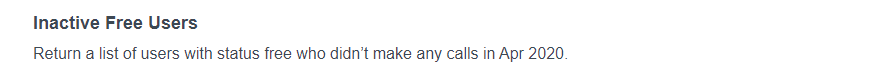

SQL Interview Question #20: Inactive Paid Users

Question Link: https://platform.stratascratch.com/coding-question?id=2018

Let’s look at some interview questions from Ring Central. This one has is considered to be easy:

Datasets:

What we are being asked is to return a list of free users who didn’t make any calls in April 2020. This question is designed to test whether you can correctly filter data based on a specific time period and handle missing activity through joins.

Approach:

One of the ways to solve this problem:

- Start by selecting distinct

user_idvalues from therc_userstable. - Use a LEFT JOIN with the

rc_callstable, joining onuser_id, and restrict the joined calls to those made between April 1 and April 30, 2020. - Filter for users with

status = 'free'. - Add a condition

c.user_id IS NULLto return only those free users who made no calls during April 2020.

SQL Interview Question #21: Paid Users in April 2020

Question Link: https://platform.stratascratch.com/coding-question?id=2017

Let’s examine another question from Ring Central, also considered to have easy difficulty:

Datasets:

The question being asked is how many paid users had any calls in April 2020. The reason this question gets asked on interviews is pretty much the same as the reason in the previous questions, since concepts being tested are quite similar. The interviewers are trying to determine whether you can set appropriate time period criteria in your query.

Approach:

One of the ways you can solve this problem:

- start a query that counts distinct user id values as output, from the calls table;

- set a condition that the dates to be queried should be between April 1 and April 30, 2020;

- set another condition that the status column value should be ‘paid’, from the users table.

SQL Interview Question #22: Top 2 Users with Most Calls

Question Link: https://platform.stratascratch.com/coding-question?id=2019

Another question from Ring Central, and the difficulty for this one is medium:

Datasets:

The task at hand is to return the top 2 users in each company that called the most. We should output the company_id, user_id, and the user’s rank; and if there are multiple users with the same rank, we need to keep all of them. You might be asked this type of question on an interview in order to determine whether you can construct a complex query with multiple outputs that requires of you to know how to keep values ranked on the same level on top of being able to perform numerous mathematical operations.

Approach:

Here is how you can try to answer this question:

- start a query that gives three outputs: company_id, user_id and rank (top contributors);

- start a sub-query that gives four outputs: the first two being company_id and user_id;

- third sub-query output should count the number of calls based on call_id from the calls table (you can define it as a new variable);

- last sub-query output should partition the values based on company_id column, order them by counting the call_id column in a descending order and rank the values in a way so it does not skip the number for similar values; this output’s values should reflect your rank output from the original query;

- join the two tables using the common column identifier;

- set criteria that only values ranked 2 or below should appear.

SQL Interview Question #23: Call Declines

Question Link: https://platform.stratascratch.com/coding-question?id=2020

Final question from Ring Central that we will look into. Difficulty level for this one is also medium, same as the question before:

Datasets:

The question here is which company had the biggest month decline in users placing a call from March to April 2020. We need to return the company id and calls variance for the company with the highest decline. The reason this question gets asked is to check whether you can run a complex query where you need to create new variables, identify and select certain time frames, and perform numerous logical and mathematical operations.

Approach:

Here is one of the ways you can try to solve this problem:

- start a query that gives four outputs: company id, March calls, April calls and a variation between March and April calls (last three outputs will be new variables); you can also create a separate table for this query as you are creating plenty of new variables;

- start a sub-query that will output company id and count the number of calls from call id column, as April calls (variable set earlier);

- join the two tables using the common column identifier;

- set criteria for the date column to only pull values between April 1 and April 30, 2020;

- group by company id;

- join this sub-query with another sub-query and repeat last 4 steps in your new sub-query, only with March values substituted for April;

- start a new query that gives two outputs: company id and calls variance (variable defined as part of your original query);

- set criteria in your new query to pull the minimum value from your calls variance variable.

Advanced SQL Interview Questions from Delta Airlines

SQL Interview Question #24: The Cheapest Airline Connection

Question Link: https://platform.stratascratch.com/coding-question?id=2008

For the final question in this article, we will look into an interview question from Delta Airlines. Difficulty level for this question is considered to be hard:

Dataset:

Here we have a task with lots of instructions that we need to comply with. The task is to produce a trips table that lists all the cheapest possible trips that can be done in two or fewer stops. The table should have the columns for origin, destination, number of stops and total cost. If two trips cost the same, but have different number of stops, we should include the one with the fewest stops. Output table should be sorted by origin, and then by destination.

Now let’s examine the data in more detail, so we can understand the task at hand better. We have 4 columns representing row id, origin airport, destination airport and cost of the trip. The airport names are abbreviated by their 3-letter codes. This table only shows the prices for one-way trips, but we will have to create and include prices of connecting trips as well in order to solve the problem. The reason this question gets asked on interviews is to test whether you can perform complex queries and whether you are able to create (and analyze) new sets of data from the existing dataset in order to find your solution.

Approach:

Here is how you can try to solve the problem:

- start a query that gives three outputs: origin, destination and minimum cost value;

- prepare a table containing all possible connections (up to 2) and flight costs by merging the dataset multiple times on these parameters;

- once you have all connections and flight costs calculated, run a sub-query to combine result sets from all possible connections;

- add parameters to make sure your destination and cost values are not zero;

- group the output by origin and destination.

Also, check out our Ultimate Guide to SQL Interview Questions that will take you through the top SQL questions for various data positions.

Share